Artificial Intelligence: The New Frontier in Medical Education

Medical faculty and educators examine the impact of technology on medical education and its role in healthcare.

AI-powered tools could revolutionize healthcare and medical education. If used judiciously, they can ease the pressures on the healthcare system posed by new and existing chronic diseases, limited resources and rising patient demand.

If medicine were only a science, technology might be the final answer. But it is not. “Medicine will always be an art and a science, even in the age of AI,” maintains Dean of the Gilbert and Rose-Marie Chagoury School of Medicine Sola Aoun Bahous. Ethical and safety issues must be considered, along with personalized care, privacy control and liability.

The impact of AI on medical education was the subject of an interdisciplinary seminar held on May 28 and organized by the school’s Continuing Medical Education (CME) Office. The seminar explored the importance of integrating AI applications into learning, instruction and assessment in medical schools.

“LAU is committed to providing high-quality education in the evolving educational landscape,” said Clinical Professor in the Department of Anesthesiology and Associate Dean for Faculty Affairs and Development Vanda Abi Raad. “By embracing technology and fostering innovation, the university aims to leverage AI to enhance medical education and empower future healthcare professionals.”

Dean Bahous was joined in the seminar by Dr. John (Jack) Boulet, senior scholar at the University of Illinois at Chicago, College of Medicine, adjunct professor of medicine at the F. Edward Hebert School of Medicine, Uniformed Services University, and consultant for LAU’s school of medicine, as well as Dr. Barbar Akle, associate provost for International Education and Programs.

Dr. Jordan Srour, assistant provost for Educational Resources and Innovation, and Dr. Nazih Youssef, program director of Urology Residency, division head of Urology and director of Assessment, moderated the discussions.

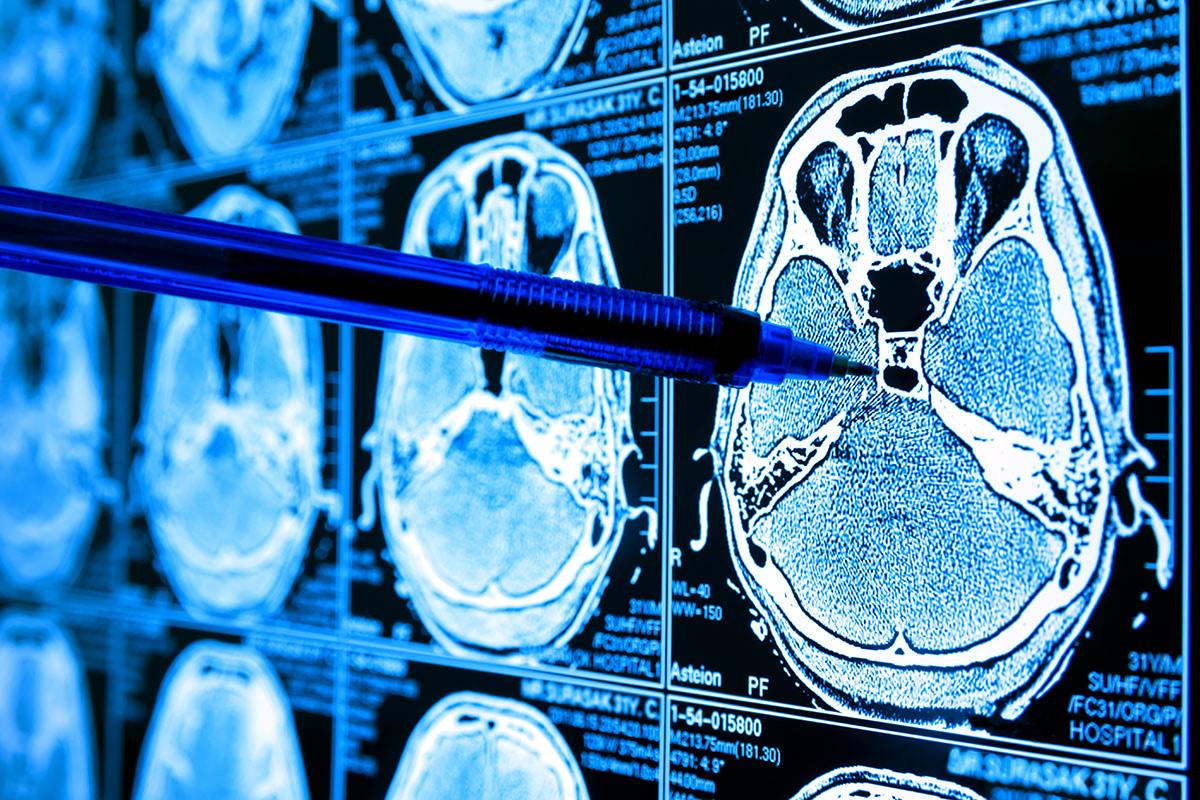

AI models of this kind are built on neural networks: series of algorithms, loosely modeled on the human brain, that teach a computer how to process data. In the case of an electrocardiogram (ECG), for instance, the neural network interprets a new ECG based on previous tracings that were fed in as training data. Yet the physician using the tool typically has no way of knowing what those data are.

The information that physicians would obtain therefore depends on the data the models are trained on. “If the data is skewed, unfair or incomplete, the model will inherit those flaws and amplify their predictions,” explained Dr. Akle. “Garbage in, garbage out.”
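The point about skewed data can be illustrated with a toy sketch. Everything in it is an assumption made for illustration: the readings are invented numbers with no clinical meaning, the two patient groups are hypothetical, and the "model" is a simple cut-off rather than a neural network. It shows only that a rule learned from one group can look accurate on that group and still fail on another.

```python
from statistics import mean

def fit_threshold(samples):
    """Learn a cut-off halfway between the mean normal and mean abnormal reading."""
    normal = [x for x, label in samples if label == "normal"]
    abnormal = [x for x, label in samples if label == "abnormal"]
    return (mean(normal) + mean(abnormal)) / 2

def predict(threshold, reading):
    """Flag a reading as abnormal when it reaches the learned cut-off."""
    return "abnormal" if reading >= threshold else "normal"

def accuracy(threshold, samples):
    """Share of samples whose predicted label matches the true label."""
    correct = sum(predict(threshold, x) == label for x, label in samples)
    return correct / len(samples)

# Invented readings for two hypothetical patient groups (not clinical data).
group_a = [(60, "normal"), (65, "normal"), (70, "normal"),
           (100, "abnormal"), (105, "abnormal"), (110, "abnormal")]
group_b = [(40, "normal"), (45, "normal"), (50, "normal"),
           (70, "abnormal"), (75, "abnormal"), (80, "abnormal")]

# The training set is skewed: it contains group A only.
skewed_threshold = fit_threshold(group_a)

accuracy_on_a = accuracy(skewed_threshold, group_a)  # group seen in training
accuracy_on_b = accuracy(skewed_threshold, group_b)  # group missing from training
```

In this sketch the cut-off classifies every group A reading correctly but misses every abnormal reading in group B, because group B was never represented in the data the rule was learned from.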

Nor can one readily know how an AI model arrives at a conclusion or decision, a phenomenon known as the “black box” problem, which stems from the complexity of the model.

For this reason, says Dr. Bahous, it is difficult to trust a system if one does not understand how it works. “The physician needs to understand the inputs and the algorithm and interpret the AI-proposed diagnosis to ensure no errors are made,” which would require some computational knowledge.

This does not mean that medical students should be trained as computer programmers or data analysts, but they should be taught to appraise an algorithm as they would a research paper, added Dr. Bahous.

They should have a solid understanding of the 4Vs of big data (volume, variety, velocity and veracity) and of how such data are aggregated, analyzed and personalized in the context of decision-making, and they should learn to interpret AI-proposed diagnoses to ensure no errors are made.

A major concern with AI-powered tools is privacy and control over data. What happens, for instance, if a patient withdraws consent to participate in a study after their information has been used in algorithm development? asked Dr. Bahous. In the European Union, the “right to be forgotten” under the General Data Protection Regulation gives any individual, in this case the patient, the right to have their personal data erased. In AI, there is no guarantee that a patient’s privacy will be protected in data sharing, and a cybersecurity attack could tamper with the algorithm, resulting in the misclassification of medical information.

However, from an assessment point of view, computer technology is not the problem but the solution for test administration and scoring, said Dr. Boulet. Automated Item Generation (AIG), the process of using models to generate test items with the aid of computer technology, is the way of the future, as it saves time and resources.

“AIG,” he explained, “uses a three-stage process for generating items where the cognitive mechanism required to solve the item is identified and manipulated using computer technology to create new items.”
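A minimal sketch of the idea follows. It assumes one common reading of the three stages (identify the reasoning the item tests, write an item template with variable slots, then let the computer fill the slots), which may differ from the process Dr. Boulet described. The template, the values and the names `ITEM_TEMPLATE`, `VARIABLES`, `answer_key` and `generate_items` are all hypothetical, and the item is a plain arithmetic exercise, not dosing guidance.

```python
from itertools import product

# Stage 1 (assumed): the cognitive step being tested is a weight-based calculation.
def answer_key(weight_kg, dose_mg_per_kg):
    """Compute the correct answer for one combination of slot values."""
    return weight_kg * dose_mg_per_kg

# Stage 2 (assumed): an item template with slots that can be varied.
ITEM_TEMPLATE = (
    "A patient weighing {weight_kg} kg is prescribed a drug at "
    "{dose_mg_per_kg} mg/kg. What is the total dose in mg?"
)

# Hypothetical slot values, chosen only for illustration.
VARIABLES = {
    "weight_kg": [50, 70, 90],
    "dose_mg_per_kg": [2, 5],
}

# Stage 3 (assumed): the computer fills the slots with every combination.
def generate_items(template, variables):
    """Return one item (stem plus keyed answer) per combination of slot values."""
    names = list(variables)
    items = []
    for values in product(*(variables[name] for name in names)):
        slots = dict(zip(names, values))
        items.append({
            "stem": template.format(**slots),
            "answer": answer_key(**slots),
        })
    return items

items = generate_items(ITEM_TEMPLATE, VARIABLES)
```

Here a single template yields six distinct items, each with its answer keyed automatically, which suggests where the savings in time and resources come from.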

Furthermore, AI can reduce bias in grading and provide automated feedback on performance. Though AI assessment is not yet a perfect system, it can help medical educators become more efficient.

Physicians can, and in fact should, harness the powers that AI provides, but they must maintain agency. Oversight policies on AI development need to be initiated, especially for the sake of healthcare, as machine learning algorithms gain popularity in decision-making.

Eventually, while technology might not displace physicians, it may very well displace those who do not use it.